Project Overview: Monitoring and Alerting System

This project is focused on implementing a monitoring and alerting system to track the status of various components within a web application and its underlying infrastructure. The system ensures that if specific issues arise, such as website downtime, virtual machine (VM) failures, service crashes, or resource exhaustion, the user is notified via email within minutes.

The use cases and scenarios that this system will monitor and alert on are:

Website Downtime:

- If the website goes down or becomes unreachable, the system will notify the user within a few minutes.

Service Failure on Virtual Machine:

- If any service (e.g., NGINX, Jenkins) running on a virtual machine crashes or is stopped, the system will send an alert.

Virtual Machine Downtime:

- If the entire virtual machine goes down or crashes, a notification will be sent to the user.

Resource Exhaustion:

- If there is no storage left, insufficient memory, or CPU overload on the virtual machine, the system will generate an alert.

The overall goal is to ensure that the user is notified of any critical issue affecting the website or its infrastructure, enabling rapid resolution.

Components Involved

The system is built using the following key components:

Blackbox Exporter:

Purpose: Used to monitor the website’s availability and response.

Functionality: Probes the website over HTTP(S) and exposes uptime metrics, such as whether the website is accessible (`probe_success`).

Node Exporter:

Purpose: Used to monitor virtual machine metrics.

Functionality: Exposes metrics such as CPU usage, memory usage, and disk storage of the virtual machine so that resource consumption and performance can be tracked.

Prometheus:

Purpose: Serves as the data collection and analysis tool.

Functionality: Scrapes (pulls) the metrics from both the Blackbox Exporter and Node Exporter, stores them as time series, and evaluates the alert rules against them.

Alertmanager:

Purpose: Manages alert notifications.

Functionality: Receives the alerts that Prometheus raises from the predefined alert rules, then groups them and sends notifications (e.g., via email) to the user when specific conditions are met, such as downtime or resource exhaustion.

Key Features: An alert only reaches Alertmanager after its condition has held for the duration set in the rule's `for` field (e.g., the website has been down for more than 1 minute, or a virtual machine has been unresponsive for a specified duration).
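The "only after a duration" behavior comes from the `for:` field of each Prometheus alert rule: an alert whose expression is true first becomes *pending*, and only turns *firing* (and is sent to Alertmanager) once the condition has held for the whole `for` duration. The Bash sketch below is a simplified simulation, assuming one rule evaluation per minute; it is not Prometheus's actual implementation, and the function name `alert_state` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: simulate how an alert rule with a `for:` duration moves
# between the inactive, pending, and firing states.
# $1    = the `for` duration in minutes (assuming one rule evaluation per minute)
# $2... = one sample per evaluation: 1 = expression true (e.g. probe_success == 0),
#         0 = expression false
# Prints the alert state after each evaluation.
alert_state() {
  local for_minutes=$1
  shift
  local consecutive=0 sample
  local -a states=()
  for sample in "$@"; do
    if [ "$sample" -eq 1 ]; then
      consecutive=$((consecutive + 1))
      # Minutes the condition has held = evaluations since it first became true.
      if [ $((consecutive - 1)) -ge "$for_minutes" ]; then
        states+=(firing)   # only now is the alert sent to Alertmanager
      else
        states+=(pending)
      fi
    else
      consecutive=0        # any false evaluation resets the alert
      states+=(inactive)
    fi
  done
  echo "${states[*]}"
}

# Website down for two consecutive evaluations with `for: 1m`:
alert_state 1 0 1 1 0   # prints: inactive pending firing inactive
```

A short blip that recovers before the `for` duration elapses never leaves the pending state, which is what keeps one-off glitches from generating emails.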

Workflow

Data Collection:

The Blackbox Exporter monitors the website by probing it over HTTP(S) and recording whether it is accessible (the `probe_success` metric).

The Node Exporter monitors the virtual machine, collecting metrics related to CPU, memory, storage, and other system resources.
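To see roughly what the Blackbox Exporter's `http_2xx` check does, the sketch below probes a URL with `curl` and reports success only for a 2xx status. It is a simplified stand-in, assuming `curl` is installed; the real exporter exposes the result (plus extra detail such as timings) as metrics like `probe_success`. The function name `probe_http` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper approximating the Blackbox Exporter's http_2xx check:
# prints 1 if the URL answers with a 2xx status within the timeout, else 0
# (mirroring the 1/0 values of the probe_success metric).
probe_http() {
  local url=$1 timeout=${2:-5}
  local code
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code.
  # If no response arrives (e.g. connection refused), curl prints 000.
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time "$timeout" "$url" || true)
  if [[ $code == 2?? ]]; then
    echo 1
  else
    echo 0
  fi
}

# Example: check the Boardgame app on VM-1
#   probe_http http://34.207.220.99:8080/
```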

Metrics Forwarding:

- Prometheus scrapes (pulls) these metrics from both exporters at regular intervals, then stores and analyzes the data.

Alerting:

Prometheus evaluates the alert rules, which specify the conditions under which an alert should be triggered (e.g., the website is down for 1 minute, or the virtual machine's CPU usage exceeds a threshold).

When a condition is met, Prometheus sends the alert to Alertmanager, which sends a notification to the user's Gmail address.

Implementation

The system will be implemented using the following architecture:

Virtual Machine 1 (VM-1): The monitored virtual machine running Node Exporter, the web application, and services like NGINX or Jenkins.

Virtual Machine 2 (Monitoring-Tool): Hosts the Prometheus, Blackbox Exporter, and Alertmanager components.

The setup will be tested in real-time to ensure that all scenarios are effectively monitored and alerts are sent promptly.

Step 1: Provision two VMs with the following configuration:

- AMI: Ubuntu
- Instance type: t2.medium
- Storage: 20 GiB
- Open ports 22, 25, 80, 443, 587, 6443, 30000-32767, 465, 27017, and 3000-10000 in the security group
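Before installing anything, it can help to confirm that the opened ports are actually reachable. A minimal sketch, assuming Bash (which provides the `/dev/tcp` redirection) and the `timeout` command from coreutils; the function name `port_open` is hypothetical, and the IP in the example is the Monitoring-Tool address used later in this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helper: exit status 0 if a TCP connection to host:port succeeds
# within the timeout (in seconds), non-zero otherwise.
# Uses Bash's built-in /dev/tcp redirection, so no extra tools are required.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  timeout "$secs" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

# Example (run from your workstation once the services are started):
#   for port in 9090 9093 9115; do
#     if port_open 54.242.56.31 "$port"; then echo "$port open"; else echo "$port closed"; fi
#   done
```

A port reported as closed either has nothing listening yet or is blocked by the security group.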

Step 2: Set up Prometheus, Alertmanager, and Blackbox Exporter on the Monitoring-Tool VM

Prometheus

Download Prometheus

```bash
wget https://github.com/prometheus/prometheus/releases/download/v2.52.0/prometheus-2.52.0.linux-amd64.tar.gz
```

Extract Prometheus

```bash
tar -xvf prometheus-2.52.0.linux-amd64.tar.gz
# after extracting, you can remove the archive
rm prometheus-2.52.0.linux-amd64.tar.gz
# rename the directory so that it is easier to find
mv prometheus-2.52.0.linux-amd64/ prometheus
```

Start Prometheus

```bash
cd prometheus/
./prometheus &
```

By default, Prometheus listens on port 9090.

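Now, let's set up the alert rules. Inside the prometheus/ directory, create a file named alert_rules.yml with the following content: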

```yaml
groups:
  - name: alert_rules
    rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Endpoint {{ $labels.instance }} down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."

      - alert: WebsiteDown
        expr: probe_success == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          description: "The website at {{ $labels.instance }} is down."
          summary: "Website down"

      - alert: HostOutOfMemory
        # Node Exporter 0.16+ metric names include the _bytes unit suffix
        expr: node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100 < 25
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Host out of memory (instance {{ $labels.instance }})"
          description: |-
            Node memory is filling up (< 25% left)
            VALUE = {{ $value }}
            LABELS: {{ $labels }}

      - alert: HostOutOfDiskSpace
        expr: (node_filesystem_avail_bytes{mountpoint="/"} * 100) / node_filesystem_size_bytes{mountpoint="/"} < 50
        for: 1s
        labels:
          severity: warning
        annotations:
          summary: "Host out of disk space (instance {{ $labels.instance }})"
          description: |-
            Disk is almost full (< 50% left)
            VALUE = {{ $value }}
            LABELS: {{ $labels }}

      - alert: HostHighCpuLoad
        # CPU busy % = 100 - idle %, averaged over all cores of each instance
        expr: 100 - (avg by (instance) (rate(node_cpu_seconds_total{job="node_exporter",mode="idle"}[5m])) * 100) > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Host high CPU load (instance {{ $labels.instance }})"
          description: |-
            CPU load is > 80%
            VALUE = {{ $value }}
            LABELS: {{ $labels }}

      - alert: ServiceUnavailable
        expr: up{job="node_exporter"} == 0
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "Service Unavailable (instance {{ $labels.instance }})"
          description: |-
            The service {{ $labels.job }} is not available
            VALUE = {{ $value }}
            LABELS: {{ $labels }}

      - alert: HighMemoryUsage
        expr: (node_memory_Active_bytes / node_memory_MemTotal_bytes) * 100 > 90
        for: 10m
        labels:
          severity: critical
        annotations:
          summary: "High Memory Usage (instance {{ $labels.instance }})"
          description: |-
            Memory usage is > 90%
            VALUE = {{ $value }}
            LABELS: {{ $labels }}

      - alert: FileSystemFull
        expr: (node_filesystem_avail_bytes / node_filesystem_size_bytes) * 100 < 10
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "File System Almost Full (instance {{ $labels.instance }})"
          description: |-
            File system has < 10% free space
            VALUE = {{ $value }}
            LABELS: {{ $labels }}
```

Now reference this rules file in prometheus.yml.

```bash
vim prometheus.yml
```

Add the file name alert_rules.yml under `rule_files`, as shown in the screenshot below:

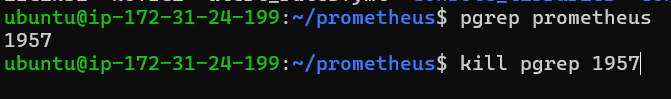

```bash
# restart Prometheus: get the process ID, kill that process, then start it again
pgrep prometheus
kill <PID>   # replace <PID> with the ID printed by pgrep
./prometheus &
```
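Killing and restarting the process works, but two standard alternatives are worth knowing: the `promtool` binary shipped in the same Prometheus archive can validate the configuration (including the rule files it lists) before you apply it, and a running Prometheus reloads its configuration and rules when it receives a `SIGHUP` signal. A minimal sketch, assuming it is run from the `prometheus/` directory; the function name `check_and_reload` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: validate prometheus.yml (and the rule files it lists)
# with promtool, then ask the running Prometheus to reload via SIGHUP.
# Assumes it is run from the extracted prometheus/ directory.
check_and_reload() {
  local config=${1:-prometheus.yml}
  # promtool exits non-zero if the config or a referenced rule file is invalid.
  if ! ./promtool check config "$config"; then
    echo "config invalid, not reloading" >&2
    return 1
  fi
  # -x matches the process name exactly; -HUP sends the reload signal.
  if ! pkill -HUP -x prometheus; then
    echo "no running prometheus process found" >&2
    return 1
  fi
  echo "reload signal sent"
}

# Usage:
#   check_and_reload prometheus.yml
```

Validating first means a typo in alert_rules.yml is reported on the terminal instead of leaving Prometheus running with the old rules.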

Blackbox Exporter

Download Blackbox Exporter

```bash
wget https://github.com/prometheus/blackbox_exporter/releases/download/v0.25.0/blackbox_exporter-0.25.0.linux-amd64.tar.gz
```

Extract Blackbox Exporter

```bash
tar xvfz blackbox_exporter-0.25.0.linux-amd64.tar.gz
rm blackbox_exporter-0.25.0.linux-amd64.tar.gz
mv blackbox_exporter-0.25.0.linux-amd64/ blackbox_exporter
```

Start Blackbox Exporter

```bash
cd blackbox_exporter
./blackbox_exporter &
```

By default, Blackbox Exporter listens on port 9115.

Alertmanager

Download Alertmanager

```bash
wget https://github.com/prometheus/alertmanager/releases/download/v0.27.0/alertmanager-0.27.0.linux-amd64.tar.gz
```

Extract Alertmanager

```bash
tar xvfz alertmanager-0.27.0.linux-amd64.tar.gz
rm alertmanager-0.27.0.linux-amd64.tar.gz
mv alertmanager-0.27.0.linux-amd64/ alertmanager
```

Start Alertmanager

```bash
cd alertmanager
./alertmanager &
```

Alertmanager listens on port 9093. Access it in a browser using the public IP of the Monitoring-Tool VM: http://54.242.56.31:9093

As we can see, no alert groups are shown yet, so we need to restart Prometheus.

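```bash
# restart Prometheus (from the prometheus/ directory): get the process ID,
# kill that process, then start it again
pgrep prometheus
kill <PID>   # replace <PID> with the ID printed by pgrep
./prometheus &
```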

In the Prometheus web UI, the alert rules are now visible.

Step 3: Set up Node Exporter & Website on the VM to be monitored

VM-1 (Node Exporter)

Download Node Exporter

```bash
wget https://github.com/prometheus/node_exporter/releases/download/v1.8.1/node_exporter-1.8.1.linux-amd64.tar.gz
```

Extract Node Exporter

```bash
tar xvfz node_exporter-1.8.1.linux-amd64.tar.gz
rm node_exporter-1.8.1.linux-amd64.tar.gz
mv node_exporter-1.8.1.linux-amd64/ node_exporter
```

Start Node Exporter

```bash
cd node_exporter
./node_exporter &
```

Node Exporter listens on port 9100. Access it using the public IP address of the VM with port 9100 (for example, http://34.207.220.99:9100/metrics).
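The HostOutOfMemory and HostOutOfDiskSpace rules compare what is available against the total. Node Exporter reads memory figures from `/proc/meminfo` and filesystem sizes from the kernel's filesystem statistics, so you can reproduce the same percentages directly on VM-1 to sanity-check the thresholds. A minimal sketch, assuming a Linux host with GNU `df`; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers reproducing the percentages used by the alert rules.

# Percentage of memory still available, like
#   node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes * 100
# (both values are in kB in /proc/meminfo, so the units cancel out)
mem_available_percent() {
  awk '/^MemTotal:/ {total = $2} /^MemAvailable:/ {avail = $2}
       END {printf "%.1f\n", avail / total * 100}' /proc/meminfo
}

# Percentage of a filesystem still available to unprivileged users, like
#   node_filesystem_avail_bytes / node_filesystem_size_bytes * 100
disk_available_percent() {
  local mount=${1:-/}
  # GNU df: -B1 reports bytes; --output prints the chosen columns in order
  df -B1 --output=avail,size "$mount" | awk 'NR == 2 {printf "%.1f\n", $1 / $2 * 100}'
}

echo "memory available: $(mem_available_percent)%"     # HostOutOfMemory threshold: < 25
echo "root fs available: $(disk_available_percent /)%"  # HostOutOfDiskSpace threshold: < 50
```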

Step 4: On VM-1, get the application source code to run as the website we will monitor

```bash
git clone https://github.com/msnehabawane/Boardgame.git
```

Since we need an application website running in the browser to monitor, we build the project into a JAR file and run it. This requires Java and Maven.

```bash
cd Boardgame/
sudo apt install openjdk-17-jre-headless
sudo apt install maven -y
# Build the JAR file
mvn package
ls
cd target/
ls
# Run the JAR file
java -jar database_service_project-0.0.7.jar
```

Access the application at http://34.207.220.99:8080/.

Step 5: Scrape Configuration

Next, we define the scrape configurations in prometheus.yml on the Monitoring-Tool VM. Node Exporter and Blackbox Exporter expose various metrics, and Prometheus scrapes them from each exporter for storage and analysis. This configuration tells Prometheus where each exporter lives, so that it receives the data it needs to monitor the system effectively.

```yaml
scrape_configs:
  # Prometheus
  - job_name: "prometheus" # Job name for Prometheus
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"] # Target to scrape (Prometheus itself)

  # Node Exporter
  - job_name: "node_exporter" # Job name for node exporter
    static_configs:
      - targets: ["34.207.220.99:9100"] # Target node exporter endpoint

  # Blackbox Exporter
  - job_name: 'blackbox' # Job name for blackbox exporter
    metrics_path: /probe # Path for blackbox probe
    params:
      module: [http_2xx] # Module that expects a 2xx HTTP response
    static_configs:
      - targets:
          - http://prometheus.io # HTTP target
          - https://prometheus.io # HTTPS target
          - http://34.207.220.99:8080/ # HTTP target with port 8080
    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: 54.242.56.31:9115 # Blackbox exporter address
```
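The three `relabel_configs` entries are what turn a plain website URL into a Blackbox probe: the URL is copied into the `target` query parameter, kept as the `instance` label, and the scrape address is replaced by the exporter's. The Bash sketch below mimics those three steps for one target and prints roughly the request Prometheus sends; Prometheus performs this relabeling internally, and the functions `urlencode` and `blackbox_probe_url` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: percent-encode a string for use in a query parameter.
# Simplified: keeps letters, digits and . _ ~ - and encodes every other
# character as %XX (assumes ASCII input).
urlencode() {
  local s=$1 out="" c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      [[:alnum:]._~-]) out+=$c ;;
      *) out+=$(printf '%%%02X' "'$c") ;;
    esac
  done
  printf '%s\n' "$out"
}

# Hypothetical helper mirroring the three relabel_configs steps for one target.
# $1 = website URL listed under static_configs (starts out as __address__)
# $2 = Blackbox Exporter address, $3 = module (default http_2xx)
blackbox_probe_url() {
  local address=$1 exporter=$2 module=${3:-http_2xx}
  # 1. source_labels [__address__] -> target_label __param_target
  local param_target=$address
  # 2. source_labels [__param_target] -> target_label instance
  local instance=$param_target
  # 3. target_label __address__ receives the fixed replacement (the exporter)
  address=$exporter
  echo "instance=$instance"
  # __param_* labels become URL query parameters; metrics_path is /probe
  echo "url=http://$address/probe?module=$module&target=$(urlencode "$param_target")"
}

blackbox_probe_url http://34.207.220.99:8080/ 54.242.56.31:9115
```

Opening the printed URL (or fetching it with `curl`) against the exporter returns the probe metrics for that single site, including `probe_success`, which is a handy way to debug a target that shows as down.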

After saving prometheus.yml and restarting Prometheus, the system starts retrieving the required information. Open Status > Targets in the Prometheus UI and refresh: each target should appear as 'UP', highlighted in green. As you can see, all components now show as 'UP', indicating that the Blackbox Exporter is properly connected. The monitoring setup is now complete and fully operational.

Step 6: Set up the configuration for receiving email notifications

Navigate to the alertmanager directory and modify the alertmanager.yml file:

```yaml
route:
  group_by:
    - alertname # Group by alert name
  group_wait: 30s # Wait before sending the first notification for a new group
  group_interval: 5m # Wait before notifying about new alerts added to an existing group
  repeat_interval: 1h # Wait before re-sending a notification for an alert that is still firing
  receiver: email-notifications # Default receiver

receivers:
  - name: email-notifications # Receiver name
    email_configs:
      - to: xyz@gmail.com # Email recipient
        from: test@gmail.com # Email sender
        smarthost: smtp.gmail.com:587 # SMTP server
        auth_username: xyz@gmail.com # SMTP auth username
        auth_identity: xyz@gmail.com # SMTP auth identity
        auth_password: "<your-gmail-app-password>" # SMTP auth password (use a Gmail app password, never commit a real one)
        send_resolved: true # Send notifications for resolved alerts

inhibit_rules:
  - source_match:
      severity: critical # Source alert severity
    target_match:
      severity: warning # Target alert severity
    equal:
      - alertname
      - dev
      - instance # Fields to match
```
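The `inhibit_rules` block means: while a `critical` alert is firing, a `warning` alert with the same values for `alertname`, `dev`, and `instance` is muted. The Bash sketch below illustrates that matching decision for two alerts given as `key=value` label lists (labels must not contain spaces). It is a simplified illustration, not Alertmanager's code; it compares a label missing from both alerts as equal (empty against empty), and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of label $2 from a "k=v k=v" list ($1),
# or an empty string if the label is absent.
label_value() {
  local pair
  for pair in $1; do
    if [ "${pair%%=*}" = "$2" ]; then
      printf '%s\n' "${pair#*=}"
      return
    fi
  done
  printf '\n'
}

# Hypothetical helper: succeed (exit 0) if the firing source alert ($1)
# inhibits the target alert ($2) under the rule in alertmanager.yml:
# source severity=critical, target severity=warning, `equal` labels match.
inhibits() {
  local source=$1 target=$2 name
  [ "$(label_value "$source" severity)" = critical ] || return 1
  [ "$(label_value "$target" severity)" = warning ] || return 1
  for name in alertname dev instance; do
    # A label missing from both alerts compares as "" == "" (equal).
    [ "$(label_value "$source" "$name")" = "$(label_value "$target" "$name")" ] || return 1
  done
}

# Example: same alertname and instance, so the warning is muted.
if inhibits "alertname=ExampleAlert severity=critical instance=vm1" \
            "alertname=ExampleAlert severity=warning instance=vm1"; then
  echo "warning alert inhibited"
fi
```

Because `alertname` must match as well, a critical alert only mutes a warning alert of the same name, not, for example, HostOutOfMemory when HighMemoryUsage fires.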

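After saving the file, restart Alertmanager so that it picks up the new configuration. You can then navigate to the Alerts section of the Prometheus UI, where you will see all the predefined rules that have been created.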
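To check the email settings without waiting for a real outage, you can push a hand-made alert straight into Alertmanager through its v2 HTTP API (`POST /api/v2/alerts`). A minimal sketch, assuming Alertmanager is reachable on the Monitoring-Tool VM at port 9093; the alert name `TestEmailAlert` and both helper functions are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the JSON body for one test alert.
# Alertmanager's v2 API expects a JSON array of alerts, each with "labels".
test_alert_payload() {
  local name=$1 severity=$2 instance=$3
  printf '[{"labels":{"alertname":"%s","severity":"%s","instance":"%s"},"annotations":{"summary":"Test alert sent manually"}}]\n' \
    "$name" "$severity" "$instance"
}

# Hypothetical helper: POST the test alert to Alertmanager.
send_test_alert() {
  local am_url=${1:-http://54.242.56.31:9093}
  curl -sS -X POST -H 'Content-Type: application/json' \
    -d "$(test_alert_payload TestEmailAlert critical manual-test)" \
    "$am_url/api/v2/alerts"
}

# Usage (from any machine that can reach port 9093):
#   send_test_alert http://54.242.56.31:9093
```

With the route above, the email should arrive after the 30s `group_wait`. Because the alert carries no end time, Alertmanager treats it as resolved once its `resolve_timeout` passes (5 minutes by default), so with `send_resolved: true` a "resolved" email should follow.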

Step 7: Website and Service Monitoring with Prometheus: Testing Alerts and Notifications

Now, let's proceed with testing. To trigger the 'WebsiteDown' alert, we start with the website running and then stop it.

On VM-1, press Ctrl + C in the terminal where the JAR is running to shut down the website. The website should now be down.

We've successfully received the first notification, indicating that the website is down. The notification provides details, confirming that the website is currently unavailable. This means the first part of our test has been successfully completed.

Next, let's test how Prometheus detects a machine-level failure. Node Exporter is running on VM-1, and in a typical production environment every node (for example, each node of a Kubernetes cluster) runs Node Exporter continuously, 24/7. If the virtual machine goes down, Node Exporter goes down with it, so Prometheus can no longer scrape it (`up == 0`) and recognizes this as an indicator that the virtual machine is offline.

Here we can see that the instance is also reported as down.

And we received an email notification.

Done!!

A Heartfelt Thanks to My Readers, Connections, and Supporters!

Let’s keep the momentum going – stay connected, stay inspired! 💡🚀

Stay tuned for my next blog. I will keep sharing my learnings and knowledge here with you.

Let's learn together! I appreciate any comments or suggestions you may have to improve my blog content.

Happy Learning!

Thank you,

Neha Bawane